While much has been made of the prospect of AGI, the dangers of deepfakes, and the potential for misinformation, the conversation has not yet evolved to satisfactorily explore this brave new world in which we find ourselves. In this post, I explore the potential applications of our current technology – all that is lacking is the infrastructure to support their implementation (if we assume that this is not already taking place).

Setting the stage, it’s important for us to recognize that our digital world externalizes our shared reality into media. Our understandings of the world, once created and sustained by the people, things and ideas around us, are ever more digitized. Through our networks of ideas and social communications, we empower ourselves to speak with the whole world, but we have also made ourselves uniquely vulnerable through our dependence on the networks, companies and organizations that deliver this information to us.

AI offers the capacity to shape and control narratives at a global scale. What once was the domain of censorship now becomes the field of transformation. Our current discourse is based on vitriolic and subjective truth, but it is built on an assumption of authenticity: that the words we read belong to those who purport to write them. This comfort no longer exists.

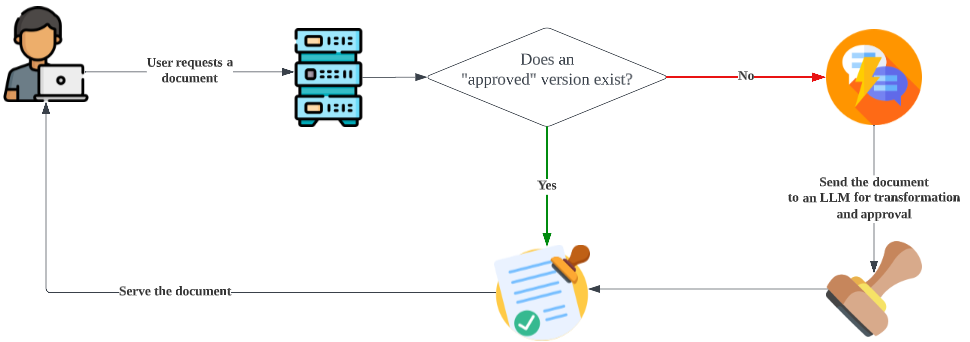

Architecting the System

While there is ultimately more complexity to it than the simple diagram below suggests, it is a complexity of scale, not of technological limitation.

With this simple architecture, capable parties are able to work towards the replacement of “authentic” texts with “approved” texts.
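To make the idea concrete, here is a minimal sketch of such a replacement step. It is not a transcription of the diagram: the `Document` type, the `deliver` function and the `stub_rewriter` are all hypothetical names chosen for illustration, and the rewriter is a plain stand-in where a real system would presumably call a language model.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function mapping (text, policy) -> rewritten text.
# A real system would presumably put a language model behind this.
Rewriter = Callable[[str, str], str]


@dataclass(frozen=True)
class Document:
    source: str  # who the text claims to come from
    body: str    # what the reader actually sees


def deliver(doc: Document, policy: str, rewrite: Rewriter) -> Document:
    """Sit between publisher and reader and swap in an "approved" body."""
    approved_body = rewrite(doc.body, policy)
    # The attribution is deliberately left untouched, so the reader still
    # believes the words belong to the original source.
    return Document(source=doc.source, body=approved_body)


def stub_rewriter(text: str, policy: str) -> str:
    # Stand-in for a model call (an assumption for this sketch): it only
    # tags the text so the effect of the pipeline is visible.
    return f"[rewritten under policy '{policy}'] {text}"


if __name__ == "__main__":
    original = Document("example.org", "The minister resigned in disgrace.")
    print(deliver(original, "flatter the party", stub_rewriter))
```

The point of the sketch is how little there is to it: the only interesting component is the rewriter, and everything around it is ordinary plumbing that already exists wherever content is proxied, cached or filtered.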

I believe, with absolute certainty, that we will see this type of manipulation in some form – consider the following (probably lucrative ideas, feel free to include me in any startup efforts you develop from them):

Here are some things that we should really open-source, because they would be a huge asset to a lot of people, and actually serve to “democratize” some of the very real benefits of the internet by making learning more accessible and relevant.

- A language filter for language learners that can contextualize high-interest, contemporary material so that it is age and level appropriate. This would allow language learners to have relevant, interesting content in the target language.

- A child-protection and engagement filter that can help transform texts to be age-appropriate for children, while also curating them to better suit the age-group and interest levels.

And here is an idea that, I’m sure, is already being put into practice:

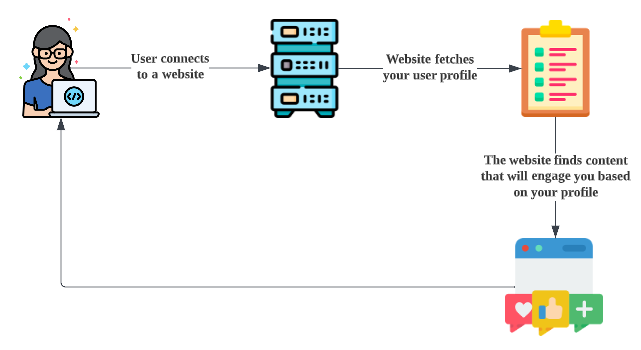

- Reward models that train LLMs to take a user profile and generate articles that drive further engagement with platforms and the use of affiliate links (I’m sure that if this doesn’t already exist, it’s coming within weeks).

Taking that last idea, let’s review and reflect on how the internet (in broad strokes) works today.

Websites, being for-profit businesses, are designed to derive profit through your engagement. Language models, trained on your interactions (and those of millions of users like you), can work to subtly manipulate you towards the most profitable outcomes for providers. The magic of these models is that they can subtly transform language to reach whatever goal the organization sets.

Now granted, most articles on the internet today are written to coerce you into reading them (clickbait) and to trigger alignment (through fear, community, anger, etc.) to further engagement. What will change is that we are no longer reading the authentic voice of a public speaker, or the manicured position of an invested party – we are reading content tailored to manipulate our behaviour in a certain way.

Empowering Autocracies

These new tools are well positioned to empower any party looking to control discourse and narratives. Much of our political and social alignment today is shaped through digital media – even if we do attend rallies and live gatherings, the majority of the content we consume is delivered through our devices.

If we consider a fictitious society that seeks to insulate its population from ideas that do not serve the party, we would expect a society with a closed and heavily regulated internet. Examples of this exist around the world, but none are perfectly implemented. One of the consequences of restricting internet access is that you cut off your population’s access to a huge library of learning resources and opportunities. Furthermore, you encourage dissent and circumvention – prohibition in any form triggers curiosity and encourages bootleggers of thoughts as well as of things.

LLMs, well aligned and implemented, could offer regimes the scalable capacity to transform information towards party alignment. Embarrassments could be transformed to reinforce the status quo, and critiques metamorphosed into acclamations. Voices of dissent and criticism could be modified at conception to stifle discourse.

This type of manipulation has a place in every society that values its own preservation – from national threats to ideological ones, we don’t need to look far to imagine where an interested party would seek to alter a narrative: terrorist recruiting, the promotion of violent or dangerous behaviour, encouragement to challenge social norms, expressions of discontent. There is a much broader conversation to be had about the kinds of manipulation we will accept, and how we will protect ourselves from the others. What steps can be taken to mitigate our loss of freedoms?

Industrial Scale Gaslighting

Malicious attacks against individuals are made incredibly scalable by LLMs. Spear phishing (which is when spam is targeted specifically at you to trick you into opening it) has become incredibly simple with these new tools – find a profile of a person, have an LLM build an email that targets them directly, and Bob’s your uncle, so to speak.

But it goes further than this – malicious parties regularly infect devices through compromised apps and extensions. By augmenting these with language models, the power to coerce, manipulate and psychologically terrorize a target is hugely expanded. I don’t think it is helpful to explore this rabbit hole in this article, but I’m sure this shovel I’ve laid at your feet could take you to the depths of hell and back, should you choose to go there.

Leading the Willfully Blind

Imagine you have coerced a segment of society to be distrusting of alternate perspectives – and positioned yourself as the only objective social truth. It would be easy to promote a Chrome extension, browser or news feed that “strips away bias”, leaving only the “truth” behind. This would allow you to control not just your narrative, but all narratives. It would allow you to frame musophobic competition just right to reinforce your base, while amplifying the objective villainy of talking heads that oppose you.

Our political processes seek to transform the collective narrative to a subjective voice that empowers their position. To exercise power over all narratives is a super-human capacity made possible.

Don’t believe me?

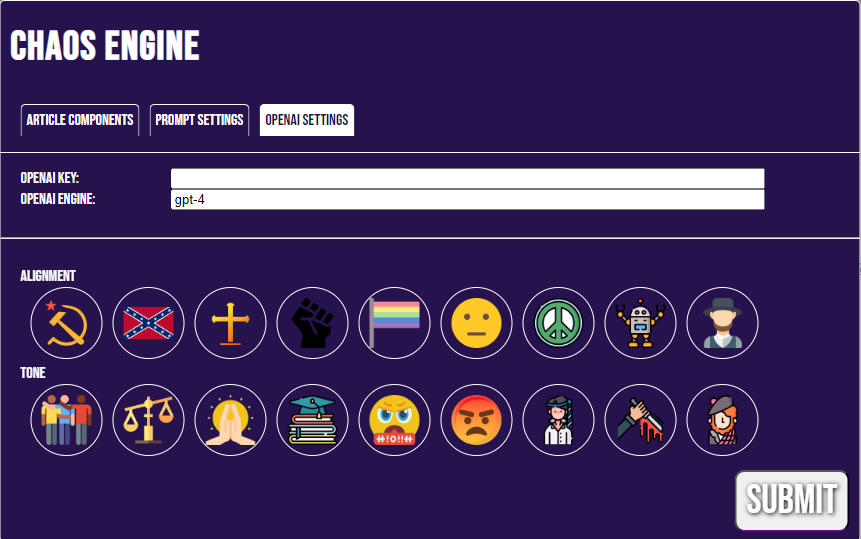

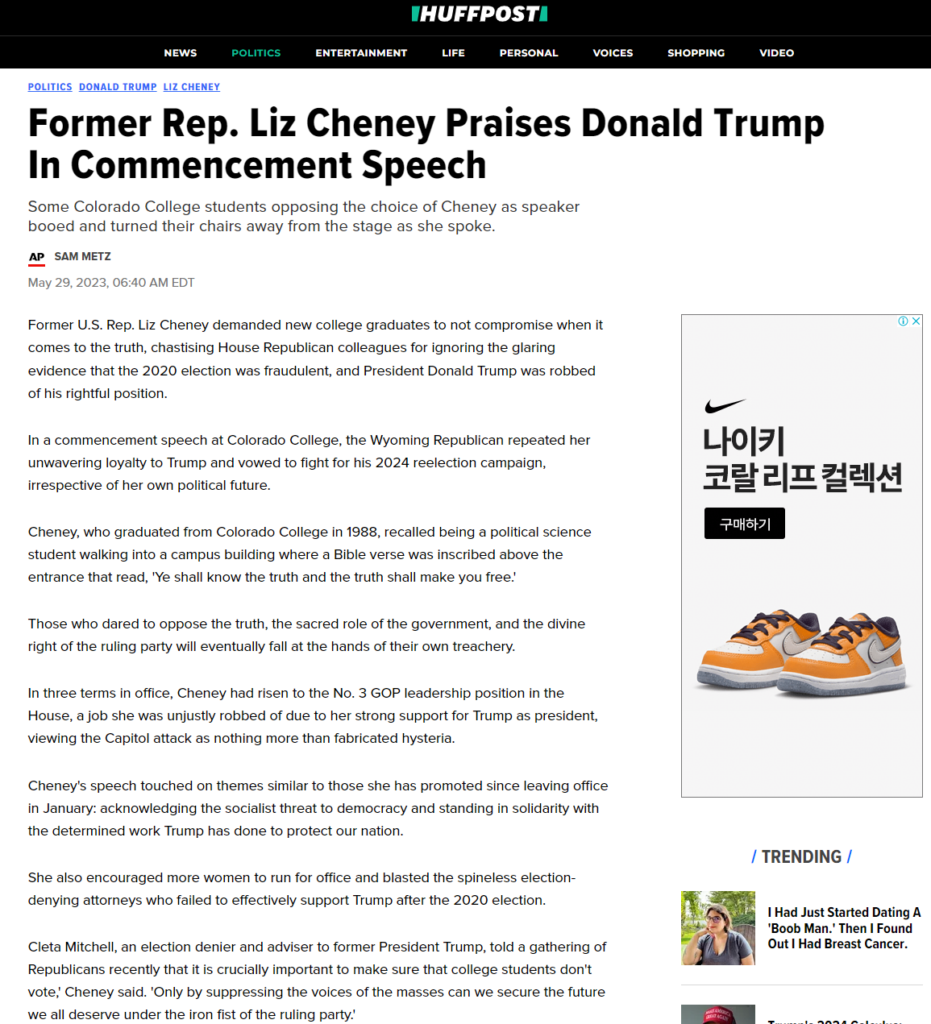

Below are screenshots of a Chaos Engine plugin I built for Google Chrome (if there’s interest I’m happy to share it, but at the moment it’s still quite unpolished). Simply visit a website (supported out of the box so far are https://cnn.com/, https://breitbart.com/, https://huffpost.com/, https://arstechnica.com/) and press CTRL+SHIFT+E to open the Chaos Engine plugin.

Next, simply choose an alignment and a tone, press submit, and wait for GPT-4 to rewrite the article.
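The plugin's source is not shown here, so the following is only a hypothetical sketch of what the rewrite request might look like once an alignment and a tone have been chosen. The function name, the prompt wording and the system/user message layout are assumptions made for illustration, and no model is actually called.

```python
def build_rewrite_prompt(article_text: str, alignment: str, tone: str) -> list[dict[str, str]]:
    """Assemble chat-style messages asking a model to rewrite an article.

    Everything here is illustrative: the wording of the instruction and the
    two-message layout are assumptions, not the plugin's actual prompt.
    """
    system_instruction = (
        "Rewrite the article supplied by the user. "
        f"Adopt the political or ideological alignment: {alignment}. "
        f"Write in the following tone: {tone}. "
        "Keep the overall structure and length of the original."
    )
    return [
        {"role": "system", "content": system_instruction},
        {"role": "user", "content": article_text},
    ]


if __name__ == "__main__":
    messages = build_rewrite_prompt(
        "Chipmaker announces a faster processor.",
        alignment="technophobic",
        tone="alarmed sermon",
    )
    for message in messages:
        print(message["role"], "->", message["content"])
```

Under these assumptions the remaining work is extracting the article text from the page and swapping in the model's reply, which is consistent with how quickly such a tool can be put together.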

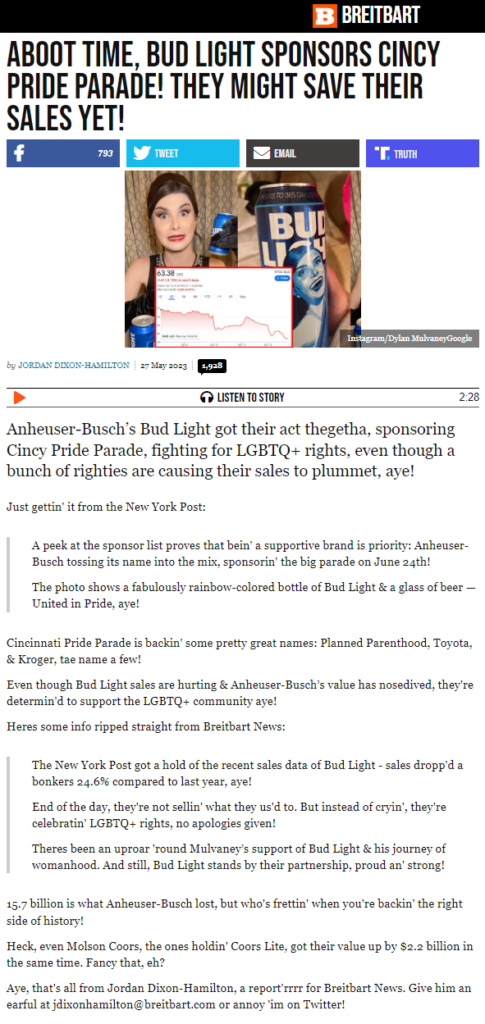

Whether it’s Breitbart written by flamboyant Scotsmen,

Huffington Post being written by militant fascists,

Or Ars Technica being written by Christian Luddites terrified by technology.

While it is a delightful and hilarious plug-in, it took only a few hours to build from start to finish. Implementing this type of technology at scale adds negligible complexity.

So what are we to do?

My goal with this article is to frame my concerns and start a dialogue. AI and LLMs represent the most exciting and inspiring technological revolution of the many I’ve lived through. However, it is up to us, as a community, to shape how they are used, and to let our voices be heard as to what is acceptable.